5 months. 5 months I’ve been working on fixing my netcode. I finally understand what you have to do to get a decent deterministic simulation between clients and servers. In my defense on why this took so long, I’ve been spending a lot of time playing Blue Prince and Clair Obscur: 33. However, after much debugging, crying, and log file reading, I’m done.

This post will (hopefully) finalize and detail exactly what needs to be done to have clients and servers agree on where a player should be. This post is going to cover some of the bugs and design issues I encountered. I’ll wrap everything up in a concise manner at the end of this post if you want to skip to that instead.

Simulations

In my previous post I talked about using an external network simulation tool. Unfortunately, the one recommended to me didn’t work. I looked for another tool and found rs-lag which I thought worked, until I realized it was corrupting my packets to the point that I couldn’t properly decrypt them. I did play around with writing a simulation class directly in C++ but it was too complicated due to how my threading code worked. In the end, I decided to write my own proxy in Go.

The two primary conditions I care about are latency and packet loss. I built this proxy server to pass the bytes directly to a channel and hand them to a series of goroutines that sleep for whatever latency I specified. This worked quite well, and I ended up finding some pretty silly bugs in my netcode.
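To make the idea concrete, here is a minimal single-threaded sketch of the same hold-and-release approach, written in C++ to match the rest of the code in this post. It is not the Go proxy: `LagSimulator`, `Push`, and `PopReady` are hypothetical names, time is passed in by the caller instead of sleeping, and the random drop is only one assumed way to simulate loss.

```cpp
#include <cassert>
#include <cstdint>
#include <queue>
#include <random>
#include <utility>
#include <vector>

// Hypothetical stand-in for the proxy: packets are held until their release
// time has passed, and a fraction of them is dropped on arrival.
class LagSimulator
{
public:
    using Packet = std::vector<uint8_t>;

    LagSimulator(uint64_t InLatencyMs, double InLossRate, uint32_t Seed = 1)
        : LatencyMs(InLatencyMs), LossRate(InLossRate), Rng(Seed)
    {
        assert(InLossRate >= 0.0 && InLossRate <= 1.0);
    }

    // Called when a packet arrives at the proxy. Returns false if it was dropped.
    bool Push(uint64_t NowMs, Packet Data)
    {
        if (Roll(Rng) < LossRate)
        {
            return false; // simulated packet loss
        }
        Held.push({NowMs + LatencyMs, std::move(Data)});
        return true;
    }

    // Returns every packet whose delay has elapsed, in arrival order.
    std::vector<Packet> PopReady(uint64_t NowMs)
    {
        std::vector<Packet> Ready;
        while (!Held.empty() && Held.front().ReleaseAtMs <= NowMs)
        {
            Ready.push_back(std::move(Held.front().Data));
            Held.pop();
        }
        return Ready;
    }

private:
    struct Entry
    {
        uint64_t ReleaseAtMs;
        Packet Data;
    };

    uint64_t LatencyMs;
    double LossRate;
    std::mt19937 Rng;
    std::uniform_real_distribution<double> Roll{0.0, 1.0};
    std::queue<Entry> Held;
};
```

A fixed latency keeps packets in order, so a plain queue is enough here. Adding jitter (a different delay per packet) would need a priority queue ordered by release time.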

Bugs & Design Problems

My first major realization was that the client doesn’t need a jitter buffer, only the server. The client simply needs to play packets as they come in, as the server knows the last acknowledged ID of the client and will send in whatever missing data the client needs.

The clients are just predicting anyway, so they don’t need to be directly in sync with the server on each frame. We can afford to lose a lot of frames and just check in every once in a while for desyncs. The only downside is that the larger the distance between check-ins, the more frames we need to re-simulate.

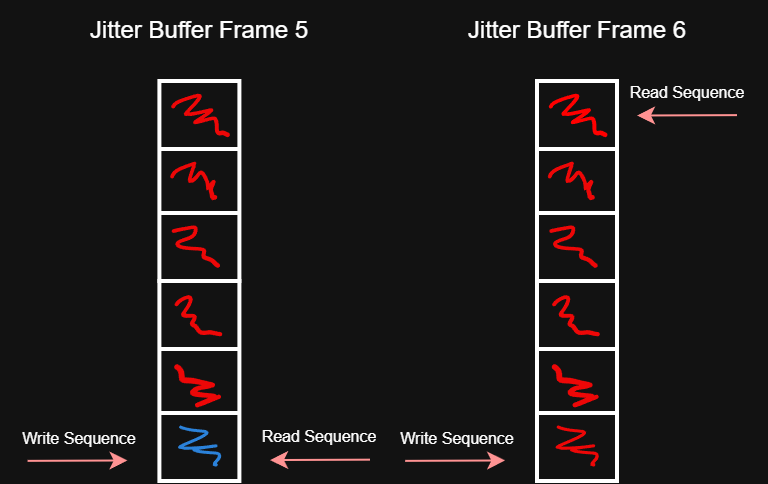

When using the client jitter buffer, I thought I was being smart and throttling the FPS but it turns out this was not only totally unnecessary, it failed to work after about 120ms of lag. After that point, my reads were starting go faster than the writes (messages from the server) so my buffer would get corrupted. As a refresher here’s that problem visualized:

Figure 1. Jitter buffer being read too fast and starting to read past the write sequence

Server to Client Packet Loss

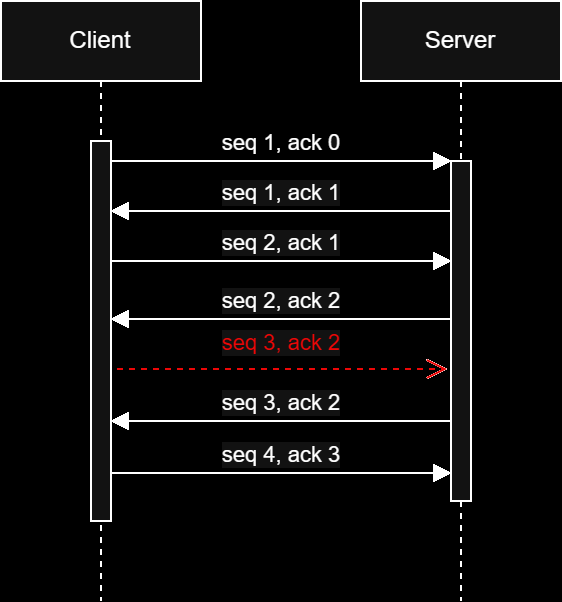

From the client’s perspective, I only need to really check the server’s view of the clients transform from the point of the last acknowledged client ID. Let’s play out the scenario of when a packet gets dropped from the server.

Figure 2. A packet gets lost on the way from the server back to the client.

We handle this situation by tracking our last acknowledged IDs. Assume we have two frames that execute on the client between seq 2, ack 1 and seq 3, ack 1. We would normally get a desync, as during seq 2 we would have ‘old’ or missing data. To handle this case, we skip any sort of desync check and assume we’ll get updated values in the next packet. Once seq 3, ack 3 comes in from the server, we THEN compare the transforms and ensure they are correct between the server and the client.

What happens if a lot of packets get dropped? In this case we don’t immediately check for desyncs. We wait until the network stabilizes and an acknowledgement ID comes in that’s exactly 1 greater than the last one we saw. This logic can be found in PMOWorld::UpdateCurrentPlayer:

    // Update our last known server state ack id, to be sent in next input packet
    auto LastAckdServerId = ServerState->State.SequenceId;
    ServerState->SetSequenceId(SequenceId);

    if (ServerState->bFull)
    {
        Player.add<network::NetworkReady>();
    }

    Log->Debug("Desync Check Server: {} Last {}", ServerState->LastClientAckId, LastClientAckId);
    bool bSameAckId = ServerState->LastClientAckId == LastClientAckId;
    bool bTooDistant = math::Distance(LastClientAckId, ServerState->LastClientAckId, network::MaxSequenceId) > 1;
    ServerState->LastClientAckId = LastClientAckId;

    // do not bother checking for desyncs until:
    // - Our state buffer is full
    // - We had a dropped packet and we've already processed this last client id
    // - We had many dropped packets and the gap is too large
    if (!ServerState->bFull || bSameAckId || bTooDistant)
    {
        return;
    }

    // determine if server and client mismatched
    auto PastPos = ClientState->State.PositionAt(LastClientAckId);
    auto PastRot = ClientState->State.RotationAt(LastClientAckId);
    auto PastVel = ClientState->State.VelocityAt(LastClientAckId);

    auto ServerPos = ServerState->State.PositionAt(SequenceId);
    auto ServerRot = ServerState->State.RotationAt(SequenceId);
    auto ServerVel = ServerState->State.VelocityAt(SequenceId);

    if (PastPos != ServerPos || PastRot != ServerRot || PastVel != ServerVel)
    {
        // desync occurred
        // ...
    }

If the gap is too large, I noticed desyncs were much more likely to occur because the state buffer indexes (which are just sequence IDs used as offsets) weren’t aligned properly. By checking only when the LastClientAckId is one greater than the last one we had seen, the offsets always lined up and I got no desyncs, as we are comparing the correct relative states in our histories. It also makes sense to not bother checking for desyncs when the network is acting up.
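Since sequence IDs wrap around, the "exactly one greater" test has to be done on a ring rather than with a plain subtraction. The sketch below shows one way to do that. `SeqDistance` and `ShouldCheckDesync` are hypothetical names, the ID space of 256 is an assumption based on the `uint8_t` sequence IDs in the snippets, and the real `math::Distance` may be implemented differently (for example, it may not care about argument order).

```cpp
#include <cassert>

// Assumed ID space: uint8_t sequence IDs that wrap after 255.
const unsigned kMaxSequenceId = 256;

// Forward distance from From to To on the wrapping sequence ring.
inline unsigned SeqDistance(unsigned From, unsigned To, unsigned Max = kMaxSequenceId)
{
    assert(From < Max && To < Max);
    return (To + Max - From) % Max;
}

// Mirrors the early return in the snippet above: only compare transforms when
// the state buffer is full and the new ack is exactly one past the previous one.
// A distance of 0 is a repeated ack; anything above 1 means packets were lost.
inline bool ShouldCheckDesync(bool bBufferFull, unsigned PrevAckId, unsigned NewAckId)
{
    return bBufferFull && SeqDistance(PrevAckId, NewAckId) == 1;
}
```

With this forward distance, an ack that arrives out of order (older than the previous one) shows up as a very large distance, so it is skipped as well.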

Client to Server Packet Loss

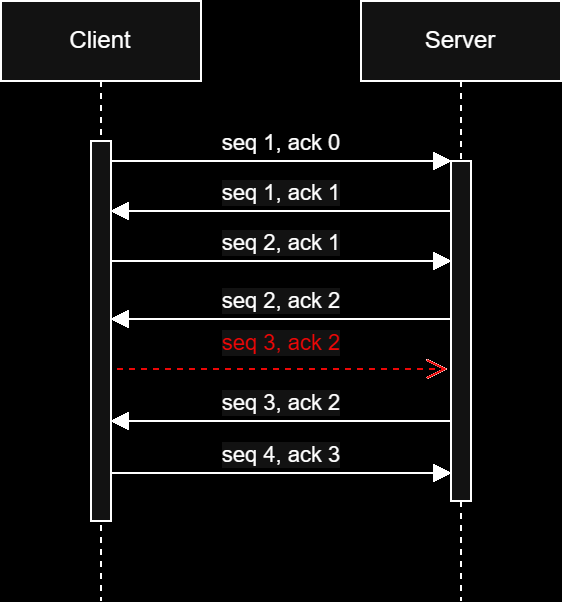

So that covers packets being dropped from server to client, but what about the reverse, when a client packet gets dropped?

Figure 3: A packet getting lost from client on the way to the server.

Before we go into detail on how I handle this situation, let’s take a trip down memory lane to how my input system was originally designed. I use a history buffer and send previous inputs, so if we had any dropped packets the server could use that data.

Funny enough, I threw away this original history buffer implementation as I thought I wouldn’t need it! But once I realized packet loss needs to be handled better, I enhanced it to be dynamic.

Now, instead of always sending back 24 or whatever inputs, I look at the last sequence ID the server sent me, look at my current sequence ID, then calculate how many entries the server could actually use/read if packets were dropped between when I send this packet and the other packets I already sent.

Here is the client code that builds out that historical input buffer on each tick.

    // Create input history from ServerState.LastClientAckId to ClientState.State.SequenceId
    const uint8_t NumHistoryRecords = math::Distance(ServerState.LastClientAckId, ClientState.State.SequenceId, network::MaxSequenceId);
    Logger->Info("Server: {}, Client: {}, LastAckClient: {}", ServerState.State.SequenceId, ClientState.State.SequenceId, ServerState.LastClientAckId);

    auto KeyPressesHistory = DynamicHistoryBuffer<uint32_t>(NumHistoryRecords);
    auto PitchHistory = DynamicHistoryBuffer<uint16_t>(NumHistoryRecords);
    auto YawHistory = DynamicHistoryBuffer<uint16_t>(NumHistoryRecords);

    // Walk backwards from the current sequence ID, wrapping around the ID space
    for (uint8_t Offset = 0; Offset < NumHistoryRecords; Offset++)
    {
        auto Idx = (ClientState.State.SequenceId+network::MaxSequenceId-Offset) % network::MaxSequenceId;
        auto Inputs = ClientState.InputAt(Idx);
        KeyPressesHistory.Push(Inputs.KeyPresses.KeyPresses);
        PitchHistory.Push(Inputs.PitchAngle);
        YawHistory.Push(Inputs.YawAngle);
        Logger->Info("PreUpdate: History (Idx: {}) KeyPress: {}, Yaw: {}, Pitch: {}",
            Idx, Inputs.KeyPresses.KeyPresses, Inputs.YawAngle, Inputs.PitchAngle);
    }

    // ...

    KeyPressesHistory.CompressBuffer(KeyPresses, KeyPressCompressionKey);
    PitchHistory.CompressBuffer(Pitch, PitchCompressionKey);
    YawHistory.CompressBuffer(Yaw, YawCompressionKey);

    // ... do flat buff serialization & send packet

On the server side we do some interesting work with this data. As a refresher, the server uses a jitter buffer to read from a queue and have a number of packets in the backlog. This works great provided no packets get lost.

To handle packets being lost, we check whether the incoming sequence ID is the next expected ID. If it isn’t, we dropped a packet, as there’s now a gap. We then fill the gap with the historical input data so that when the jitter buffer is read from, it has this filled-in data from our history. First, let’s show the input data getting processed before inserting it into the jitter buffer:

    auto HistoryBuffer = std::make_unique<network::ServerInputHistory>(NumHistoryRecords);

    // New buffer created and can replay the last N inputs (where N == HistoryBuffer->NewInputs)
    HistoryBuffer->KeyPresses.LoadCompressed(IncomingKeyPresses->begin(), IncomingKeyPresses->end(), IncomingKeyPressesKey);
    HistoryBuffer->Yaw.LoadCompressed(IncomingYaw->begin(), IncomingYaw->end(), IncomingYawKey);
    HistoryBuffer->Pitch.LoadCompressed(IncomingPitch->begin(), IncomingPitch->end(), IncomingPitchKey);

    auto KeyPress = HistoryBuffer->KeyPresses.ReadFromOffset(NumHistoryRecords-1);
    auto Yaw = HistoryBuffer->Yaw.ReadFromOffset(NumHistoryRecords-1);
    auto Pitch = HistoryBuffer->Pitch.ReadFromOffset(NumHistoryRecords-1);
    auto NewInput = network::Inputs{input::Input(KeyPress), Yaw, Pitch, false};

    Logger->Debug("Insert Buffer {} ServerSeqAckId: {} clientSeqAckId: {}, ClientTime: {}, Input: {} Yaw: {} Pitch: {} (NumHistory: {})",
        SequenceId, Command->server_seq_id_ack(), Command->client_seq_id(), Command->client_time(),
        NewInput.KeyPresses.KeyPresses, NewInput.YawAngle, NewInput.PitchAngle, NumHistoryRecords);

    auto Buffer = PlayerEntity.get_mut<ServerJitterBuffer>();
    Buffer->Insert(*Logger, SequenceId, Command->server_seq_id_ack(), Command->client_seq_id(), Command->client_time(), NewInput, std::move(HistoryBuffer));

    if (!PlayerEntity.has<network::NetworkReady>() && Buffer->bReady)
    {
        PlayerEntity.add<network::NetworkReady>();
    }

The Buffer->Insert code handles looking for these gaps / lost packets and then writes to our jitter buffer using the historical inputs.

    void ServerJitterBuffer::Insert(logging::Logger &Log, uint8_t SequenceId, uint8_t ServerAck, uint8_t ClientAck, uint64_t ClientTime, network::Inputs& Input, std::unique_ptr<network::ServerInputHistory> History)
    {
        SequenceCounter++;

        if (SequenceId != WriteSequenceId+1)
        {
            // looks like we lost some packets, fill in our entries with the data from history
            auto Missing = SequenceId - WriteSequenceId;
            Log.Debug("{} Packet(s) were dropped ({} - {}, {})", Missing, SequenceId, WriteSequenceId, static_cast<uint8_t>(WriteSequenceId+1));

            for (int i = History->Size-1; i >= 0; i--)
            {
                Log.Debug("History (Seq: {}) Yaw: {}", i, History->Yaw.ReadFromOffset(i));
            }

            // Signed on purpose: as a uint8_t the "< 0" check below could never
            // trigger, and the index would wrap around to 255 instead.
            int HistoryIdx = static_cast<int>(History->Size) - 1;
            for (int i = Missing-1; i >= 0; i--)
            {
                auto Idx = (WriteSequenceId+i) % JitterSize;
                HistoryIdx--;
                if (HistoryIdx < 0)
                {
                    Log.Warn("Potential corruption of jitter entries due to dropped packet history size");
                    break;
                }

                auto KeyPress = History->KeyPresses.ReadFromOffset(HistoryIdx);
                auto Yaw = History->Yaw.ReadFromOffset(HistoryIdx);
                auto Pitch = History->Pitch.ReadFromOffset(HistoryIdx);
                auto Input = network::Inputs{input::Input(KeyPress), Yaw, Pitch};
                Entries.at(Idx) = std::make_unique<ServerJitterData>(Input, 0, 0, 0, false);
            }
        }

        // Write the entry for the packet that actually arrived
        WriteSequenceId = SequenceId;
        Entries.at(WriteSequenceId % JitterSize) = std::make_unique<ServerJitterData>(Input, ServerAck, ClientAck, ClientTime, false);

        if (!bReady && SequenceCounter > BufferReadyAt)
        {
            bReady = true;
        }
    }

After we handle any missing elements we add in the actual sequence ID data with the input that is expected for this packet.

And that’s it! Now we can lose a number of packets from our client and the server can still process them, as long as the historical data comes in faster than our read sequence ID advances. Since we have a buffer of about 4-5 packets between when we write and when we read, we can lose around 30-40% of our packets and still process the client’s inputs as if nothing happened!
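For anyone who wants to poke at the gap-filling idea in isolation, here is a stripped-down sketch. Everything in it is a hypothetical stand-in: an input is just a number, `MiniJitterBuffer` is not the real `ServerJitterBuffer`, and it assumes the history arrives oldest first with the current packet's input as the last element (which matches how the snippet above reads the newest input from offset `NumHistoryRecords-1`).

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Input = uint32_t;               // hypothetical: a real input has keys, yaw, pitch
const std::size_t kJitterSize = 16;   // assumed; divides 256 so indexes stay contiguous on wrap

struct Slot
{
    bool bFilled = false;
    Input Value = 0;
};

struct MiniJitterBuffer
{
    std::array<Slot, kJitterSize> Entries{};
    uint8_t WriteSequenceId = 0;
    bool bStarted = false;

    // History holds the sender's recent inputs, oldest first; its last element
    // is the input for SequenceId itself.
    void Insert(uint8_t SequenceId, const std::vector<Input>& History)
    {
        assert(!History.empty());
        // uint8_t arithmetic keeps the gap correct across the 255 -> 0 wrap.
        const uint8_t Gap = bStarted ? static_cast<uint8_t>(SequenceId - WriteSequenceId) : 1;
        if (Gap == 0 || Gap > 127)
        {
            return; // duplicate or stale (reordered) packet
        }
        // Walk backwards from the new packet, filling every missed slot that
        // the history can still cover.
        for (uint8_t Back = 0; Back < Gap && Back < History.size(); Back++)
        {
            const uint8_t Seq = static_cast<uint8_t>(SequenceId - Back);
            Slot& Target = Entries[Seq % kJitterSize];
            Target.bFilled = true;
            Target.Value = History[History.size() - 1 - Back];
        }
        WriteSequenceId = SequenceId;
        bStarted = true;
    }
};
```

If packet 1 arrives and then packet 4 arrives carrying the inputs for 1 through 4, slots 2 and 3 get filled from the history before the reader ever reaches them. If the history is shorter than the gap, the oldest missing slots simply stay unfilled.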

Small jitter buffer bug

A silly bug I encountered was that every once in a while my jitter buffer was really small (only 1-2 packets in the queue). Since I use sequence IDs as indexes into the buffer, I noticed that on client startup my sequence IDs would sometimes reset to 0, meaning the 3 or 4 packets I had just put into the buffer would get overwritten, leaving me with little wiggle room if packets got dropped.

This was evident by the following logging:

    wirepair@DESKTOP-C1TQ319:~/pmo/build/Debug$ grep "Current Seq" client.log
    [2025-05-10 19:22:23.908] [test] [warning] Current Seq: 0
    [2025-05-10 19:22:23.941] [test] [warning] Current Seq: 1
    [2025-05-10 19:22:23.974] [test] [warning] Current Seq: 2
    [2025-05-10 19:22:24.008] [test] [warning] Current Seq: 3
    [2025-05-10 19:22:24.042] [test] [warning] Current Seq: 0
    [2025-05-10 19:22:24.076] [test] [warning] Current Seq: 1
    [2025-05-10 19:22:24.109] [test] [warning] Current Seq: 2
    [2025-05-10 19:22:24.144] [test] [warning] Current Seq: 3

This was a real head scratcher as I traced my code and never reset my sequence IDs or cleared any values. I had a hunch my entire reliable endpoint class was being copied/destroyed or something. To test this I defaulted to the age old printf pointer debug method:

    printf("addr of Endpoint: %p\n", (void *)EndpointInstance);

After running, my terminal was graced with the following output:

    addr of Endpoint: 0x7fa2ac000c30
    addr of Endpoint: 0x7fa2ac000c30
    addr of Endpoint: 0x7fa2ac000c30
    addr of Endpoint: 0x7fa2ac000c30
    addr of Endpoint: 0x7fa2ac005600
    addr of Endpoint: 0x7fa2ac005600
    addr of Endpoint: 0x7fa2ac005600

Tracing where I created these endpoint instances, I looked into the client.cpp and immediately noticed my mistake. If I receive another GenKeyResponse message from a server, I’ll re-create the Peer and overwrite my instance. A quick “if” check fixed this.

    Logger.Info("Got server GenKeyResponse");
    Peer = std::make_unique<ReliablePeer>(UserId, ListenerSocket, ListenerSocket->SocketAddr(), UserKey);
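The snippet above doesn't include the "if" check itself, so here is a minimal sketch of what such a guard could look like. The `ReliablePeer` and `Client` types below are simplified, hypothetical stand-ins (the real peer takes a socket, address, and key), and returning a bool is just a convenience for the example.

```cpp
#include <cassert>
#include <memory>

// Hypothetical stand-in for the real peer, which also owns the sequence IDs
// and buffers that were being wiped out by the re-creation.
struct ReliablePeer
{
    int UserId;
};

struct Client
{
    std::unique_ptr<ReliablePeer> Peer;

    // Returns true only when a new peer was created.
    bool OnGenKeyResponse(int UserId)
    {
        assert(UserId >= 0); // illustrative precondition only
        if (Peer)
        {
            // Duplicate response: keep the existing peer and its sequence state.
            return false;
        }
        Peer = std::make_unique<ReliablePeer>(ReliablePeer{UserId});
        return true;
    }
};
```

Whether a second response should be ignored outright or treated as a deliberate reconnect is a design decision. This sketch only covers the "ignore duplicates" case described above.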

Three changesets that fixed everything

- fix adding from last ack, not last seq: This was an important realization that the ‘diff’ from the server needed to account for adding the player’s locations from the last acknowledged ID, not from the last sequence, as we may have many dropped packets.

- remove client jitter buffer: Also an important realization. The client doesn’t need a jitter buffer, as we only care about the latest updates from the server. Also, the more lag we have, the higher the chance that the jitter buffer will be read from faster than the server is responding.

- reduce how often we need to check for desyncs: The final nail in the ‘too many desyncs’ coffin. Turns out we should only really check for physics simulation desyncs when network conditions are stable. By enabling some debug printing, I noticed that when lots of dropped packets occurred, the client got confused about which sequence ID it should use to check whether we are desynced. By only checking for desyncs if the incoming last acknowledged sequence ID is +1 of the previous last acknowledged sequence ID, we have a much more stable network / physics simulation!

I can now run clients and servers with 200ms or more of latency while dropping 20-40% of packets and get smooth network simulations without desyncs. I’m PRETTY SURE I’m done working on this code for a while, but I thought that last time and that was a month or two ago.

PMO Netcode Recap

Let’s break down exactly what I needed to get this all to work.

Server

- Send incrementing sequence IDs to each client, along with the sequence ID that was last seen from that client

- Create a jitter buffer and read in 4-5 packets before the server starts processing the client’s packets in the world tick. This gives a nice bit of wiggle room for if/when packets get dropped

- Client should send historical inputs from the last acknowledged ID from the server. This allows the server to fill in the jitter buffer with previous input data that was dropped/lost.

- The Server DOES NOT do any rollback, throttling, or anything other than writing to the jitter buffer and then reading per-client in each tick.

- Given the last received ID from the client, create a delta of all things that have changed between that last received ID and the current server frame. This includes the server’s understanding of the player’s current location/rotation/velocity.

Client

- Send incrementing sequence IDs to the server, and respond with the last seen sequence ID of the server

- For each tick, store client state in a rolling buffer that is addressable by these sequence IDs, including:

  - Any and all inputs

  - Any values used in any part of the physics simulations (current velocity of the player etc)

  - Physics state (JoltPhysics makes this dead simple)

  - Don’t worry about other network actors as those are just interpolated from the server data.

- Read in server updates and process as they come in, don’t bother checking for desyncs until:

  - At least 16-24 frames worth of data has been sent back and forth

  - The last ack’d sequence ID is one away from the current incoming acknowledged sequence ID.

- If you use a delta, you’ll need to add the last ack’d ID when inserting transform data into our state buffers. I made a mistake and used the last sequence id, which was invalid. (This is complicated to explain, see the code)

- Compare the server’s incoming sequence ID transform data with the last client acknowledged ID. This ensures that they are both looking at the same state of the physics simulation at the same point in time. Be sure to compare the location, rotation and, if you are sending it, the velocity values, not just location!

- If you DO desync you’ll need to rollback:

  - Rewind physics to the last client ack’d ID

  - Teleport player back to where the server sees the player at that ID

  - Check the distance between the last ack’d ID, and the current sequence ID of the client

  - Iterate that amount of times (distance) and be sure to replay:

    - The user input

    - Run any prephysics updates

    - Call physics update

    - Store those newly created values (location/rotation/velocity) from the physics engine back into our client state buffer
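The rollback steps above can be sketched with a toy one-dimensional "physics" step standing in for the real engine. This is only an illustration under stated assumptions: `FrameState`, `Step`, and `PredictedClient` are hypothetical names, the buffers cover the full assumed `uint8_t` ID space, and there is no pre-physics update or real physics snapshot as there would be with JoltPhysics.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

struct FrameState
{
    int Position = 0;
    int Velocity = 0;
    bool operator==(const FrameState& O) const
    {
        return Position == O.Position && Velocity == O.Velocity;
    }
};

// Toy deterministic physics: the input is an acceleration of -1, 0 or +1.
inline FrameState Step(FrameState S, int Input)
{
    assert(Input >= -1 && Input <= 1);
    S.Velocity += Input;
    S.Position += S.Velocity;
    return S;
}

struct PredictedClient
{
    std::array<int, 256> Inputs{};        // input applied during each frame
    std::array<FrameState, 256> States{}; // state at the end of each frame
    uint8_t CurrentSeq = 0;               // wraps naturally at 255 -> 0

    // Normal prediction path: advance one frame and record input and result.
    void Tick(int Input)
    {
        const FrameState Prev = States[CurrentSeq];
        CurrentSeq++;
        Inputs[CurrentSeq] = Input;
        States[CurrentSeq] = Step(Prev, Input);
    }

    // The server reports the state it computed for our frame AckId.
    // Returns true if a rollback and replay was needed.
    bool Reconcile(uint8_t AckId, const FrameState& ServerState)
    {
        if (States[AckId] == ServerState)
        {
            return false; // histories agree, nothing to do
        }
        // Distance between the ack'd frame and now, correct across the wrap.
        const uint8_t Frames = static_cast<uint8_t>(CurrentSeq - AckId);
        States[AckId] = ServerState; // rewind and teleport to the server's view
        uint8_t Seq = AckId;
        for (uint8_t i = 0; i < Frames; i++)
        {
            const FrameState Prev = States[Seq];
            Seq++;
            // Replay the stored input and write the result back into history.
            States[Seq] = Step(Prev, Inputs[Seq]);
        }
        return true;
    }
};
```

Note that the comparison covers both position and velocity, in line with the advice above to compare more than just location. After a successful `Reconcile`, the client's history from the ack'd frame onward matches what the server would compute from the same inputs.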

It should be noted the above is all for UNRELIABLE UDP packets. I’m cheating quite a bit and treating them more like reliable packets, but a lot of the lower level stuff is handled by my port of reliable.io.

Summary

Well now that I feel a lot more confident in the netcode I can start looking at the combat system again! I’m excited, but I may take a quick break and beat Clair Obscur: 33 first. See you in the next post!