This is more of a brain dump post than an implementation devlog post. I want to write down my thoughts and figure out a series of improvements I can make to my netcode before I actually implement it. There are some pretty unique challenges that MMOs face that just aren’t a problem in other multiplayer games. Namely, we can’t send all data in a single packet per frame like we can with other online game types.

Our current setup

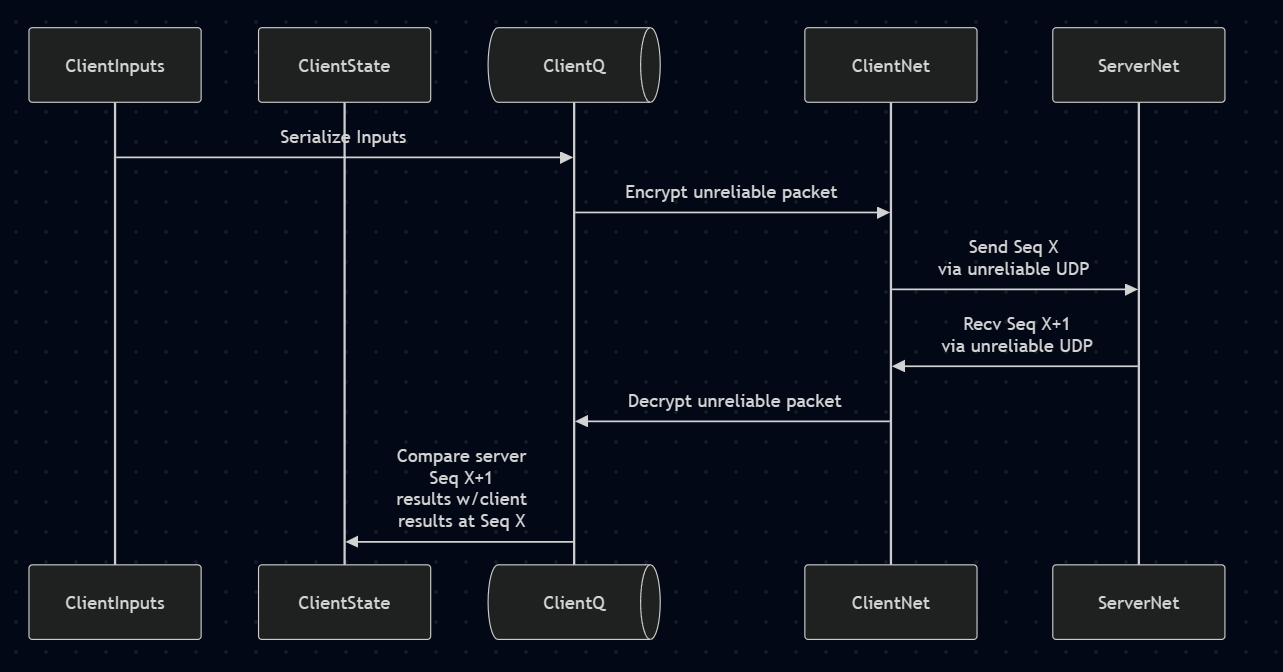

Let’s review our current design before we dig into how to optimize it. There’s quite a lot going on for both the client (mermaid chart) and the server (mermaid chart).

Client

Our client sends its inputs via an unreliable UDP channel. Each input packet contains a differential history of every input since the last sequence id the server acknowledged. Meaning if we are sending out sequence id 10, and the last ack we got from the server was 7, we’d send the inputs 8, 9 and now 10. This way if any of our packets gets dropped along the way, the server can reconstruct all events leading up to the last packet IT saw.
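To make the "send everything since the last ack" idea concrete, here is a minimal sketch. `InputFrame` and `InputHistory` are hypothetical names for illustration, not the actual types from my netcode, and sequence id wraparound is ignored.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <vector>

// Hypothetical input record; the real packet layout is not shown in this post.
struct InputFrame {
    uint32_t sequence_id;
    uint8_t  buttons;  // placeholder for whatever the real input state is
};

class InputHistory {
public:
    // Record the input for the sequence id we are about to send.
    void push(const InputFrame& frame) {
        assert(pending_.empty() || frame.sequence_id > pending_.back().sequence_id);
        pending_.push_back(frame);
    }

    // Drop every input the server has confirmed it saw.
    void acknowledge(uint32_t last_acked) {
        while (!pending_.empty() && pending_.front().sequence_id <= last_acked) {
            pending_.pop_front();
        }
    }

    // Inputs to pack into the next outgoing packet: all unacked frames, oldest first.
    std::vector<InputFrame> unacked() const {
        return std::vector<InputFrame>(pending_.begin(), pending_.end());
    }

private:
    std::deque<InputFrame> pending_;
};
```

With inputs 1 through 10 pushed and an ack for 7, `unacked()` returns the frames for 8, 9 and 10, which matches the example above.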

We then process our unreliable UDP channel’s incoming packets where we get in some sequence id greater than whatever we just sent out. After decrypting and deserializing it from our network processing thread, we then push the deserialized packet into our queue to be read by the main game loop thread.

We have two state buffers, a client state buffer, and a server state buffer. Both of these hold a shared StateBuffer object which we use for tracking sequence id acknowledgements, and their associated position, velocity and rotation values. This is so we can compare what the client physics predicted with what the server predicted and returned to us. Since the client is already “in the future” we need to have a historical series of values for each frame between where we are now in the client and where we are when the server sent the result back to us.

If they disagree, we set a network::PhysicsDescyned component on the player so our physics system can roll the player back. OK so that’s the client point of view, which honestly we don’t care TOO much about here, except that all of these updates occur in a single 1024 byte packet. Let’s do a quick refresh of the server side of this.

The server receives a packet from the client over the unreliable UDP channel. We decrypt it in our network thread and put it into our packet queue. The first, and only, processing of that packet this frame is putting it into our JitterBuffer. This jitter buffer exists for each player and is used to collect a few packets from them to handle cases where we start getting packet loss.

If we DO have dropped packets, we read in that player’s historical inputs and try to ‘fill in the blanks’ of the packets that were dropped. This allows us to reduce the chance of the player being desynchronized because we lost an action or input that would put them in different places (client thinks it pressed move forward, server says it didn’t, so server tries to move the player back).

OK so for that frame we actually have another system that processes the ‘head’ of the jitter buffer’s packet data. This pops the client’s packet data that was sent a few frames back and processes it. (This basically means the server is running in the past).
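Here is a rough sketch of that jitter buffer behaviour: inputs are stored by sequence id, history from later packets fills in anything that was dropped, and the head is only popped once a few frames are buffered. The `JitterBuffer` interface and `InputFrame` struct below are assumptions for illustration, not my real implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <optional>
#include <vector>

// Hypothetical input record, one per client sequence id.
struct InputFrame {
    uint32_t sequence_id;
    uint8_t  buttons;
};

class JitterBuffer {
public:
    // delay_frames: how many frames we hold back before processing the head.
    explicit JitterBuffer(std::size_t delay_frames) : delay_(delay_frames) {}

    // Each packet carries the newest input plus its history. Storing all of
    // them fills in any sequence id whose own packet was dropped.
    void insert(const std::vector<InputFrame>& packet_inputs) {
        for (const InputFrame& in : packet_inputs) {
            if (in.sequence_id >= next_to_process_) {
                frames_.emplace(in.sequence_id, in);  // no-op if already stored
            }
        }
    }

    // Pop the oldest input once more than delay_ frames are buffered,
    // which is what keeps the server running slightly in the past.
    std::optional<InputFrame> pop_head() {
        if (frames_.size() <= delay_) return std::nullopt;
        auto it = frames_.begin();
        InputFrame head = it->second;
        next_to_process_ = head.sequence_id + 1;
        frames_.erase(it);
        return head;
    }

private:
    std::size_t delay_;
    uint32_t next_to_process_ = 0;
    std::map<uint32_t, InputFrame> frames_;
};
```

If the packet for sequence id 9 is lost but the packet for 10 arrives carrying 8, 9 and 10, the buffer still ends up with an entry for 9.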

Let’s say this packet the server processes moves the character forward and into a new map chunk/cell. This action will update the Area of Interest (AoI) for that player and any players within the same cell. Processing the cell’s inhabitants, we store which players can see which other players in each player’s own vector of neighbors.

With our AoI updated, we now need to serialize this state and send the player its list of neighbors. Some of these neighbors may be new, requiring us to send LOTS of data, and some may be old, requiring us to only send a delta of what changed since the last packet went out. A new player can require almost 120-160 bytes of data, whereas an update may only require us to send 20 or so bytes (if they haven’t moved for instance).

We take this serialized state and push it onto our queue from our game thread to be processed by the network thread. The network thread pops it off the queue, creates a new network message, increments the sequence id by 1, and sets inside what the last sequence id IT saw from that particular client.

Right now this serialized state that contains the player’s details AND the neighbors’ details has to fit within a single 1024 byte packet. This obviously isn’t going to scale for an MMORPG.

Some quick math

Our player updates contain a lot of information, way too much:

- All players around us (in our AoI)
  - Their equipment: 13 possible slots, where each one has an item id (uint32) and a type (uint8). So that’s 13 * 5 bytes = 65 bytes when fully equipped.
  - Their position (vec3, 3 floats) so 12 bytes
  - Their rotation (quat4, 4 floats) so 16 bytes
  - Their velocity (vec3, 3 floats) so 12 bytes
  - Their attributes (health/mana/shield etc) (4 floats) so 16 bytes
  - Their traits (5, 1 byte each) so 5 bytes (we probably don’t need to send this)
  - Their current action, 1 byte
  - Their entity id (uint64) so 8 bytes
  - Entity type (player) 1 byte
  - Damage events from this player (they are the instigator)
    - Damage class (enum) 1 byte
    - Target entity id (uint64) 8 bytes
    - Amount of damage (float) 4 bytes
    - Total: 13 bytes per event
  - Total for a fully equipped player: 136 bytes + (13 bytes * number of outbound damage events)
- The player itself:
  - Their attributes (health/mana/shield etc) (4 floats) so 16 bytes
  - Their traits (5, 1 byte each) so 5 bytes (again, probably don’t need to send this, can be done in an RPC)
  - Their position (vec3, 3 floats) so 12 bytes
  - Their rotation (quat4, 4 floats) so 16 bytes
  - Their velocity (vec3, 3 floats) so 12 bytes
  - Their damage events (13 bytes each, as above)
  - Total: 61 bytes + (13 bytes * number of outbound damage events)

Now of course those are worst-case scenarios. We aren’t sending that much data every frame, just for new players or when things ‘change’. However, it does NOT include the flatbuffers overhead for tables.

To be extra conservative we need to fit everything into a single 1024 byte packet. Why 1024 bytes? I have some overhead with my flatbuffers data, as well as my encryption nonces, and overall we want to be below the Maximum Transmission Unit (MTU) size (typically 1500 bytes on Ethernet). We do not want our packets getting fragmented or dropped without us having full control over it, so to be safe we limit ourselves to a pretty small buffer. Crazy that this hasn’t changed in like 40 years…

So we have preettty small buffers and a new player can cost us 136 bytes. That gives us room for only 7 new players per packet.
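As a sanity check on those totals, here is the same arithmetic as compile-time constants (C++17). The constant names are made up for this sketch, and the sizes are raw field sizes only, with no flatbuffers or encryption overhead.

```cpp
#include <cassert>
#include <cstddef>

// Raw field sizes as listed in this post (no flatbuffers table overhead).
constexpr std::size_t kEquipment  = 13 * (4 + 1);  // 13 slots: uint32 item id + uint8 type
constexpr std::size_t kVec3       = 3 * 4;         // position or velocity
constexpr std::size_t kQuat       = 4 * 4;         // rotation
constexpr std::size_t kAttributes = 4 * 4;         // health/mana/shield etc
constexpr std::size_t kTraits     = 5;
constexpr std::size_t kAction     = 1;
constexpr std::size_t kEntityId   = 8;
constexpr std::size_t kEntityType = 1;

// Damage class + target entity id + amount.
constexpr std::size_t kDamageEvent = 1 + 8 + 4;

// A fully equipped neighbor we have never sent before.
constexpr std::size_t kNeighborFull = kEquipment + kVec3 + kQuat + kVec3 +
                                      kAttributes + kTraits + kAction +
                                      kEntityId + kEntityType;

// The player's own state.
constexpr std::size_t kSelf = kAttributes + kTraits + kVec3 + kQuat + kVec3;

constexpr std::size_t kPacketBudget = 1024;

// How many brand new neighbors fit in one packet, given damage events each.
constexpr std::size_t new_players_per_packet(std::size_t damage_events_each) {
    return kPacketBudget / (kNeighborFull + damage_events_each * kDamageEvent);
}

static_assert(kDamageEvent == 13);
static_assert(kNeighborFull == 136);
static_assert(kSelf == 61);
static_assert(new_players_per_packet(0) == 7);
```

Even a single damage event per neighbor drops that to 6 new players per packet.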

Yikes. That isn’t going to work in an MMO. What options do we have?

We have quite a few actually, and despite this already being a long post, that’s what we will be covering here!

Quantization/Compression

Most of you probably saw my player data sizes and scoffed that I am not quantizing my floats! I agree, I should be, but I worked for 5 months to get my damn client rollback code working, the last thing I want to do now is potentially introduce issues converting quantized float values. (I will at some point, I just don’t want to fight with physics and rollback right now). If you are unfamiliar with quantization, check out my other post on floats, movement and quantization.
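For a feel of what quantization would buy us, here is a minimal sketch that squeezes a float with a known range into 16 bits. The `quantize`/`dequantize` helpers and the 0 to 126 meter range in the note below (the chunk size mentioned later in this post) are assumptions for illustration. This is not what my netcode does today.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Map a value in [min, max] onto the full range of a 16-bit integer.
// Values outside the range are clamped.
inline uint16_t quantize(float value, float min, float max) {
    assert(max > min);
    const float t = (std::clamp(value, min, max) - min) / (max - min);
    return static_cast<uint16_t>(std::lround(t * 65535.0f));
}

// Recover an approximation of the original value.
inline float dequantize(uint16_t q, float min, float max) {
    assert(max > min);
    return min + (static_cast<float>(q) / 65535.0f) * (max - min);
}
```

With a 0 to 126 meter range the step size is roughly 2 mm, and a vec3 position would shrink from 12 bytes to 6. The catch is exactly the one mentioned above: client and server both have to simulate from the same rounded values or rollback will flag false desyncs.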

I could also do some bit smooshing/compression which I may also implement at a later date, but compression ain’t gonna smash 100 players’ data into a single packet.

We’re gonna have to look at alternative strategies.

Fragmenting our messages

The obvious solution here is that we will need to send multiple packets per player, possibly per tick. I have fragmentation working for reliable UDP packets, but not for unreliable packets. We really don’t want to be creating player updates with reliable packets because they may be out of date by the time we get them. So we can’t use our reliable channel’s fragmentation, what about our unreliable channel?

Well, no. Because we have sequence ids that are tightly coupled between client & server state, if we start sending a ton of packets per frame our sequence ids will be a nightmare to manage.

Luckily, I’ve abstracted out my netcode where I have the concept of a “Peer”. This peer object is created for each game session and contains the reliable/unreliable channels. All we need to do is add a new UnreliableOverflow channel as a type and create the new endpoint in our peer object!

Now those overflow sequence ids can be separate from our primary player update channel, allowing us to stuff all additional players into additional packets.
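Roughly, the idea looks like the sketch below: each channel inside the peer keeps its own sequence counters, so overflow packets never disturb the ids the rollback code depends on. `ChannelType`, `Channel` and this `Peer` layout are simplified stand-ins, not my actual classes.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

enum class ChannelType : uint8_t {
    Reliable,
    Unreliable,          // primary player update channel, tied to rollback
    UnreliableOverflow,  // additional neighbor data, sequenced independently
    Count
};

struct Channel {
    uint32_t local_sequence = 0;   // last sequence id we sent on this channel
    uint32_t remote_sequence = 0;  // last sequence id we saw from the other side

    uint32_t next_sequence() { return ++local_sequence; }
};

class Peer {
public:
    Channel& channel(ChannelType type) {
        assert(type != ChannelType::Count);
        return channels_[static_cast<std::size_t>(type)];
    }

private:
    std::array<Channel, static_cast<std::size_t>(ChannelType::Count)> channels_{};
};
```

Sending seven overflow packets in one tick bumps only the overflow channel’s counter. The primary unreliable channel still advances by exactly one per tick.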

Distance and culling

Another optimization is determining distance and culling those who are out of range. Our current AoI uses Jolt sensors (collision boxes) that are created for each UE5 landscape “chunk”, where a chunk is just the FBX mesh that I exported from the landscape tool. Each of the landscape chunks is 126 by 126 meters. We have two layers of sensors. The local sensor is the one the player currently occupies (so 126mx126m). Then there is the multi-sensor, which surrounds the player in a 3×3 (378mx378m) series of chunks.

As the player moves in between the sensors, they are added to or removed from other players inside of those sensors. What we probably want to do is set up priority levels of players depending on their distance. So we’d put players that are within 50 meters in one zone, then players just outside of that (say up to 150m) into another, then another group up to around 250m, then anything after that we just simply cull.
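The zone assignment itself could be as simple as the sketch below. The 50/150/250 meter radii come from the paragraph above and will almost certainly get tuned. The `Zone` enum and `zone_for` helper are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

enum class Zone : uint8_t { Near, Mid, Far, Culled };

struct Vec3 {
    float x;
    float y;
    float z;
};

// Placeholder radii in meters, taken from the rough numbers in this post.
constexpr float kNearRadius = 50.0f;
constexpr float kMidRadius  = 150.0f;
constexpr float kFarRadius  = 250.0f;

// Classify a neighbor by distance from the viewer. Squared distances are
// compared so we never need a sqrt per neighbor.
inline Zone zone_for(const Vec3& viewer, const Vec3& other) {
    const float dx = other.x - viewer.x;
    const float dy = other.y - viewer.y;
    const float dz = other.z - viewer.z;
    const float d2 = dx * dx + dy * dy + dz * dz;
    if (d2 <= kNearRadius * kNearRadius) return Zone::Near;
    if (d2 <= kMidRadius * kMidRadius)   return Zone::Mid;
    if (d2 <= kFarRadius * kFarRadius)   return Zone::Far;
    return Zone::Culled;
}
```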

We can also cull players that are behind us, so we will calculate the player’s rotation and determine which neighboring players are in view and outside that initial 50m zone.
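One way to do the "behind us" check is a dot product between the player’s forward direction and the direction to the neighbor. The sketch below works on the horizontal plane only and assumes `forward` is a unit vector already derived from the player’s rotation. The function names and the field of view value are placeholders.

```cpp
#include <cassert>
#include <cmath>

// Horizontal plane only; height is ignored for the view check.
struct Vec2 {
    float x;
    float z;
};

// True if `other` lies within half_fov_radians of the viewer's forward direction.
inline bool in_view_cone(const Vec2& viewer, const Vec2& forward,
                         const Vec2& other, float half_fov_radians) {
    const float dx = other.x - viewer.x;
    const float dz = other.z - viewer.z;
    const float dist = std::sqrt(dx * dx + dz * dz);
    if (dist == 0.0f) return true;  // standing on the same spot
    const float cos_angle = (dx * forward.x + dz * forward.z) / dist;
    return cos_angle >= std::cos(half_fov_radians);
}

// Neighbors inside always_send_radius are always sent, even when behind us.
// Everyone else is only sent when inside the view cone.
inline bool should_send(const Vec2& viewer, const Vec2& forward, const Vec2& other,
                        float always_send_radius, float half_fov_radians) {
    const float dx = other.x - viewer.x;
    const float dz = other.z - viewer.z;
    if (dx * dx + dz * dz <= always_send_radius * always_send_radius) return true;
    return in_view_cone(viewer, forward, other, half_fov_radians);
}
```

In practice the cone should probably be a bit wider than the real camera frustum, so players don’t pop in when someone spins the camera quickly.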

But what about big PvP battles? This is usually where games shit the bed, at least the ones I’ve played. The few that didn’t completely throw up that I’ve seen (never played) are Throne and Liberty and Lineage 2.

What usually ends up happening is this, demonstrated by WoW Classic. Slideshows. Nothing breaks the immersion more than everyone standing in place with their walking animations running.

Keep in mind that one of the ways games get away with having lots of players on screen is by trusting the client data (such as visibility, location information etc.) so they have less to calculate / send out. I obviously will not do that, everything must be server authoritative because cheaters are not someone I want to do favors for.

One thing that can really assist here is forcing a camera perspective. All of those games allow you to zoom out pretty far, which increases the draw area that we need to concern ourselves with. With my game I purposely chose an over the shoulder 3rd person view so we can at least limit to ONLY what the player currently sees, meaning it should be quite safe to cull players behind us.

This camera angle thing is also why top down games like Albion Online can be really strict in what they draw and what they are able to cull. Your field of view and viewable distance are tiny compared to first / 3rd person.

With these priority zones we can also limit what exactly we send. For a player 250m away we probably don’t need to send the client the entire equipment stack. Maybe we only send pos/rotation, and just calculate a local velocity as a ‘guess’ for interpolation. This is almost like a Level of Detail (LoD) but for network assets.
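A sketch of how that network LoD could be expressed is a per-zone field mask. The split below (what the middle zone gets, for instance) is a guess for illustration, and the `Zone`, `Field`, `fields_for` and `payload_bytes` names are hypothetical. Byte sizes are the raw ones from the math section.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

enum class Zone : uint8_t { Near, Mid, Far, Culled };

// One bit per group of fields we might serialize for a neighbor.
enum Field : uint8_t {
    kPosition   = 1 << 0,
    kRotation   = 1 << 1,
    kVelocity   = 1 << 2,
    kAttributes = 1 << 3,
    kEquipment  = 1 << 4,
};

// Which fields a neighbor in a given zone gets. Pure guesswork for now.
constexpr uint8_t fields_for(Zone zone) {
    switch (zone) {
        case Zone::Near:   return kPosition | kRotation | kVelocity | kAttributes | kEquipment;
        case Zone::Mid:    return kPosition | kRotation | kVelocity | kAttributes;
        case Zone::Far:    return kPosition | kRotation;
        case Zone::Culled: return 0;
    }
    return 0;
}

// Raw payload size for a mask, using the field sizes listed earlier.
constexpr std::size_t payload_bytes(uint8_t fields) {
    std::size_t bytes = 0;
    if (fields & kPosition)   bytes += 12;
    if (fields & kRotation)   bytes += 16;
    if (fields & kVelocity)   bytes += 12;
    if (fields & kAttributes) bytes += 16;
    if (fields & kEquipment)  bytes += 65;
    return bytes;
}

static_assert(payload_bytes(fields_for(Zone::Far)) == 28);
```

Under these assumptions a far away player costs 28 bytes of raw state instead of well over 100, before any delta encoding.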

Putting it all together

So I think what I’ll be doing over the next few weeks is implementing these features. Starting with creating that unreliable overflow channel. Next I’ll work on creating the distance / view checks per player and create the zone concepts.

Given these zones we have another trick we can do, simply not send all zone updates every tick. We can round robin them, keeping track of which players had updates sent and when to send the next batch. This should give us a bit more leeway and allow us to slowly roll through updates without the server having to send out 7-10 packets PER player, per tick.
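A bare-bones version of that round robin could look like the sketch below: a cursor per recipient that resumes where the last tick stopped. `RoundRobinSender` is a hypothetical name, and this version ignores neighbors joining or leaving between ticks, which would shift what the cursor points at.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

class RoundRobinSender {
public:
    // max_per_tick: how many neighbor updates we allow per tick for this list.
    explicit RoundRobinSender(std::size_t max_per_tick) : max_per_tick_(max_per_tick) {
        assert(max_per_tick > 0);
    }

    // Returns the entity ids to include this tick, continuing from where the
    // previous call stopped and wrapping around at the end of the list.
    std::vector<uint64_t> next_batch(const std::vector<uint64_t>& neighbors) {
        std::vector<uint64_t> batch;
        if (neighbors.empty()) return batch;
        const std::size_t count = std::min(max_per_tick_, neighbors.size());
        cursor_ %= neighbors.size();
        for (std::size_t i = 0; i < count; ++i) {
            batch.push_back(neighbors[cursor_]);
            cursor_ = (cursor_ + 1) % neighbors.size();
        }
        return batch;
    }

private:
    std::size_t max_per_tick_;
    std::size_t cursor_ = 0;
};
```

One option is an instance per zone with different budgets, so the near zone gets updated every tick while the far zone cycles through over several ticks.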

Because…

The server constraints

I honestly think my Jolt physics engine is going to be the largest bottleneck, but we also need to concern ourselves with the absolutely massive amounts of packets per second (pps) we’ll need to be sending out. Let’s go back to some math, and assume we have a battle with 100 players going on in a relatively small area.

Let’s say we don’t do any real culling or zones, but we do want to split up our players into packet sizes that will fit.

Let’s say each player is already rather visible so we are only sending the 60-70 or so bytes per player. That’s 7000 bytes of player updates to send out to each client, or about 8 packets per client, including our player update packet. With 100 clients that means we have a total of 800 packets per tick, where a tick is 33ms (30 Hz). That brings us to 24,000 packets per second.
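The packet count above, spelled out as a small sketch so the assumptions are easy to tweak. The helper names are made up. It uses the post’s simplification that each client receives updates for all 100 players at 70 bytes each, split into 1024 byte packets, plus one primary player update packet.

```cpp
#include <cassert>
#include <cstdint>

struct PacketLoad {
    uint64_t packets_per_client;  // per tick, for one recipient
    uint64_t packets_per_tick;    // across all recipients
    uint64_t packets_per_second;
};

constexpr uint64_t ceil_div(uint64_t a, uint64_t b) { return (a + b - 1) / b; }

inline PacketLoad estimate_packets(uint64_t players, uint64_t bytes_per_player,
                                   uint64_t packet_budget, uint64_t ticks_per_second) {
    assert(packet_budget > 0);
    PacketLoad load{};
    // Neighbor data split into packets, plus the primary player update packet.
    load.packets_per_client = ceil_div(players * bytes_per_player, packet_budget) + 1;
    load.packets_per_tick = load.packets_per_client * players;
    load.packets_per_second = load.packets_per_tick * ticks_per_second;
    return load;
}
```

Calling `estimate_packets(100, 70, 1024, 30)` gives 8 packets per client, 800 per tick and 24,000 per second.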

Server throughput

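Each of the 100 clients gets about 7000 bytes per tick, so that’s 100 * 7000 bytes * 30 ticks = 21,000,000 bytes per second, or 168 megabits per second of payload. (The 24,000 packets split those bytes between them, they don’t each carry 7000 bytes.) That’s still a lot of data for a single battle, around 54 TB a month if it ran around the clock, and that’s before per-packet header overhead. Not something I’d want to host in GCP, they will GOUGE you in egress pricing. Thankfully there are better, dedicated services specifically designed for game hosting that should only cost a few thousand dollars even for 25Gbps and they are completely unmetered.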

syscalls

As we scale past 100 players we will introduce another possible bottleneck, the massive amount of syscalls we have to do. Each one of our sendto function calls requires a syscall into the kernel. These are expensive as they have to switch context between userland and kernel land (storing/retrieving state).

Thankfully Linux has an answer to this in the form of sendmmsg. This will allow us to send a number of messages in a single system call, greatly reducing the number of user/kernel land transitions we pay for. Another possibility is using io_uring, but I’m not sure I’ll need to go that far. sendmmsg seems like the best/easiest solution to the syscall problem.
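For reference, a minimal sketch of batching with sendmmsg(2) on Linux. The `Outgoing` struct and `send_batch` helper are hypothetical. A real version would need to handle partial sends (the return value can be less than the batch size) and would send already encrypted buffers.

```cpp
#ifndef _GNU_SOURCE
#define _GNU_SOURCE  // sendmmsg and mmsghdr are GNU/Linux extensions
#endif
#include <netinet/in.h>
#include <sys/socket.h>
#include <sys/uio.h>

#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// One datagram waiting to go out: where it goes and what it contains.
struct Outgoing {
    sockaddr_in dest;
    std::string payload;
};

// Hands the whole batch to the kernel with a single sendmmsg call.
// Returns the number of messages sent, or -1 on error (errno is set).
inline int send_batch(int fd, std::vector<Outgoing>& batch) {
    assert(fd >= 0);
    if (batch.empty()) return 0;

    std::vector<iovec> iovecs(batch.size());
    std::vector<mmsghdr> msgs(batch.size());  // value-initialized to zero

    for (std::size_t i = 0; i < batch.size(); ++i) {
        iovecs[i].iov_base = batch[i].payload.data();
        iovecs[i].iov_len = batch[i].payload.size();

        msgs[i].msg_hdr.msg_name = &batch[i].dest;
        msgs[i].msg_hdr.msg_namelen = sizeof(batch[i].dest);
        msgs[i].msg_hdr.msg_iov = &iovecs[i];
        msgs[i].msg_hdr.msg_iovlen = 1;
    }

    return sendmmsg(fd, msgs.data(), static_cast<unsigned int>(msgs.size()), 0);
}
```

With something like this, the 8 packets for one player (or the packets for many players) go out with one trip into the kernel instead of one sendto each.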

Other options

There are other optimization techniques of course (dynamic server meshing etc) but I’m not sure I want to go through the trouble of trying to implement that until I can at least get 100 players on screen with my current setup. If you have any other ideas, please let me know over on Mastodon!

And that’s it!

I’ll start with adding the new channel, add the zones, figure out a priority based system for sending updates in a round robin style fashion, and then see what kind of compression/quantization I could do on my data structures to further minimize the packet sizes.